
OpenAI’s Sam Altman Highlights Massive GPU Needs Beyond a Single Data Center

### Quick Summary

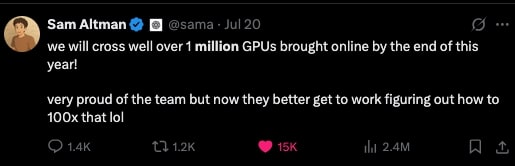

– Sam Altman, CEO of OpenAI, claimed in July 2025 that OpenAI would bring “well over 1 million GPUs online by the end of this year.”

– The claim appears to refer to aggregate GPU capacity across partnerships rather than to a single facility or data center.

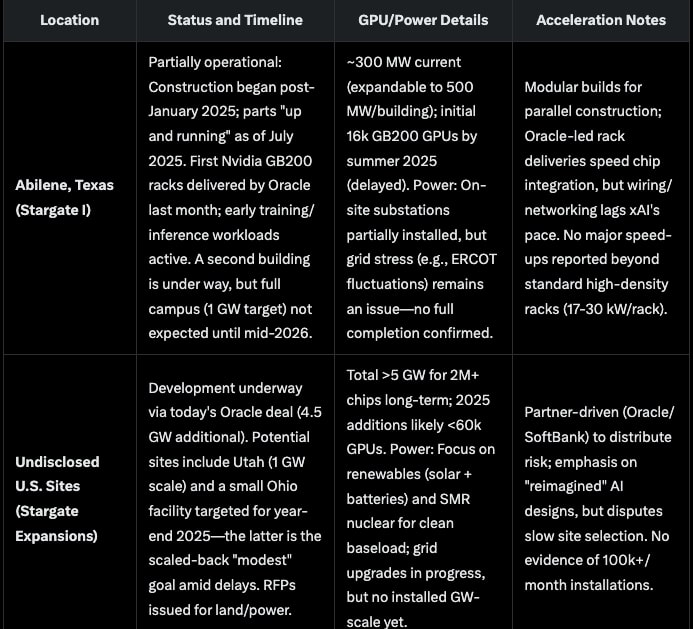

– Stargate’s main site in Texas is expected to house 400,000 chips by mid-2026, but current execution timelines point to scaled-back near-term plans and delays at Stargate’s hyperscale facilities.

– Realistically, between 30,000 and 60,000 new GPUs might be added in 2025 through partnerships with providers such as Microsoft Azure and Oracle Cloud.

– Competitors such as xAI’s Colossus have achieved highly efficient single-cluster GPU deployments (~100k GPUs with unified memory), outperforming OpenAI’s distributed, multi-data-center approach, which is hindered by network latency between sites.

– Other notable players include Meta (expanding from ~100k GPUs to more than 300k), Google, which uses TPUs (scaled, but in a less coherent configuration), and China’s DeepSeek (large-scale, but on fragmented hardware).
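A quick back-of-the-envelope check shows why the headline figure likely describes aggregate capacity. The sketch below simply expresses the other figures quoted in this summary as a share of the 1 million GPU claim. It assumes the numbers can be compared directly, although they measure different things (total capacity online, one site's planned chip count, and estimated new 2025 additions). The percentages therefore indicate scale only, not a forecast. The constant and function names are illustrative.

```python
# Figures quoted in the summary above. Names are illustrative, not from any source.
HEADLINE_CLAIM = 1_000_000  # "well over 1 million GPUs online by the end of this year"
STARGATE_TEXAS = 400_000    # chips planned at the Texas Stargate site by mid-2026
NEW_2025_LOW = 30_000       # low estimate of new GPUs added in 2025
NEW_2025_HIGH = 60_000      # high estimate of new GPUs added in 2025


def share_of_claim(count: int, claim: int = HEADLINE_CLAIM) -> float:
    """Return `count` as a percentage of the headline claim."""
    return 100.0 * count / claim


comparisons = {
    "Stargate Texas (mid-2026 plan)": STARGATE_TEXAS,
    "New GPUs in 2025 (low estimate)": NEW_2025_LOW,
    "New GPUs in 2025 (high estimate)": NEW_2025_HIGH,
}

for label, count in comparisons.items():
    # Rough scale comparison only: these figures count different things.
    print(f"{label}: {count:,} = {share_of_claim(count):.0f}% of the 1M claim")
```

Even the planned Texas site would account for only 40% of the headline number, and the estimated 2025 additions for 3% to 6%. This is consistent with the reading that the claim counts capacity spread across partner facilities.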
