Breakthrough Proof Reduces Computational Space Requirements

Quick Summary

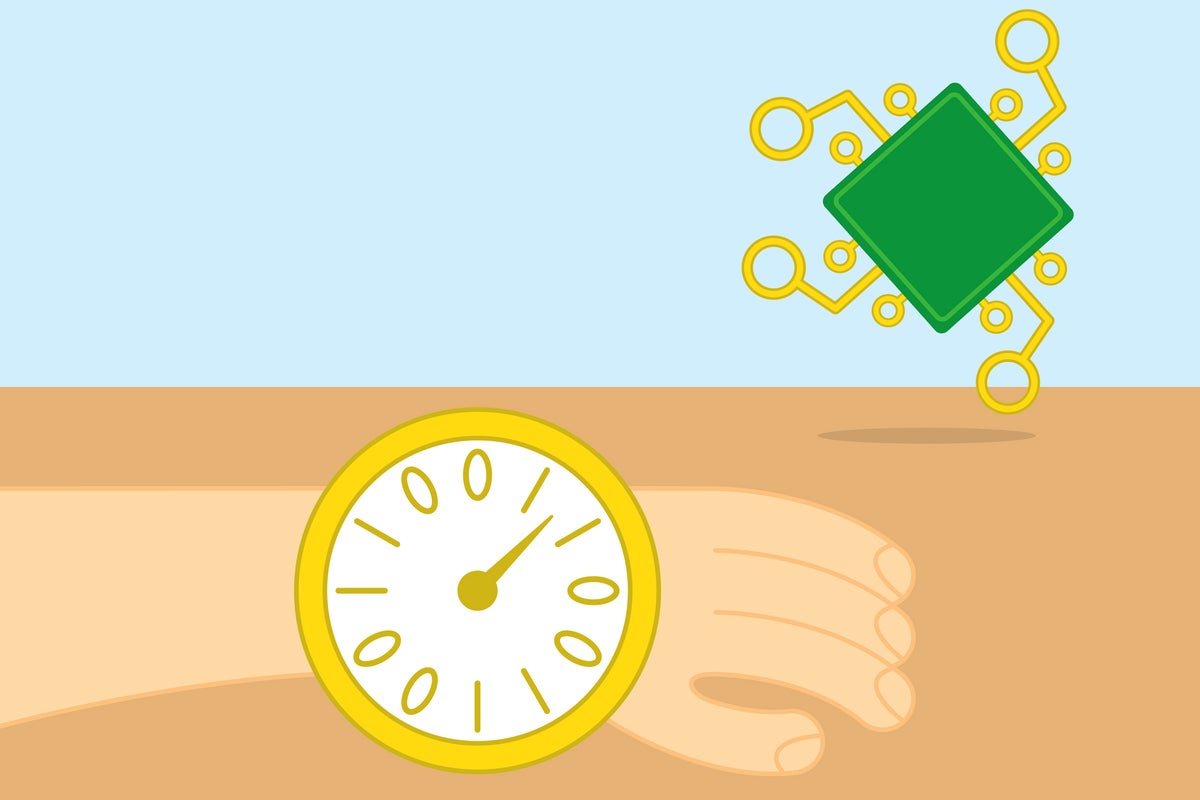

- Complexity theorists had long assumed that, in the worst case, solving a problem in t steps requires roughly t bits of memory.

- Ryan Williams, an MIT computer scientist, presented groundbreaking research challenging this assumption at the ACM Symposium on Theory of Computing.

- He demonstrated that problems solvable in time t need only about √t bits of memory (up to logarithmic factors), a meaningful reduction from previous beliefs.

- The breakthrough relies on “reductions,” which transform one problem into another mathematically equivalent problem to optimize space usage.

- Mahdi Cheraghchi of the University of Michigan called the finding “unbelievable” and regards it as a crucial step forward in understanding computational efficiency.

- This result emphasizes using space wisely rather than simply increasing available memory.
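
To give a feel for the size of the gap between t and √t, here is a minimal Python sketch. It is only an illustration of the arithmetic, not part of Williams's proof: the function names are hypothetical, and constant and logarithmic factors are deliberately ignored, so the numbers show the shape of the bounds rather than real memory requirements.

```python
import math

def linear_space_bits(t: int) -> int:
    """Older intuition: memory grows roughly in step with running time t."""
    return t

def sqrt_space_bits(t: int) -> int:
    """Shape of the new bound: roughly sqrt(t) bits (log factors and constants ignored)."""
    return math.isqrt(t)

if __name__ == "__main__":
    # Compare the two growth rates for a few illustrative running times.
    for t in (10**6, 10**9, 10**12):
        old = linear_space_bits(t)
        new = sqrt_space_bits(t)
        print(f"t = {t:>16,} steps | ~t bits: {old:>16,} | ~sqrt(t) bits: {new:>10,}")
```

Under these simplifications, a computation of a trillion steps drops from about a trillion bits of memory to about a million. The result is asymptotic and theoretical, so this should not be read as a statement about how existing programs will behave.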

Indian Opinion Analysis

Ryan Williams’s result has potential relevance for India, where advances in computing can aid major sectors such as education technology, healthcare digitization, and scalable infrastructure for government services like Aadhaar or UPI. Reducing the computational space needed may enable inexpensive hardware solutions tailored for resource-constrained environments. As India becomes increasingly dependent on digital platforms to support its socio-economic growth, optimizing computational models can bolster efforts toward accessible technology without compromising efficiency. This breakthrough underscores the importance of theoretical research in guiding practical applications relevant to emerging economies like India.