Why Sharing Your PIN With AI Chatbots Is a Risky Move

Quick Summary

- The article covers a ChatGPT use case in which users ask for advice on resetting forgotten PIN codes.

- A user who had forgotten the PIN for their account asked how to regain access and received tailored advice in ChatGPT’s responses.

- The example highlights how AI can help people troubleshoot everyday technical issues.

Indian Opinion Analysis

ChatGPT’s ability to help users with minor but crucial problems, such as forgotten PIN codes, shows how far AI has spread into daily life. As India adopts digital tools across sectors, from banking to government services, such applications could make those services more accessible to millions. However, relying on AI for sensitive matters like PIN recovery also raises data privacy and security concerns, given the diversity of India’s cybersecurity landscape. The challenge for India is to balance innovation with regulation that ensures responsible use while keeping technology inclusive.

Read more: Source link

Quick Summary

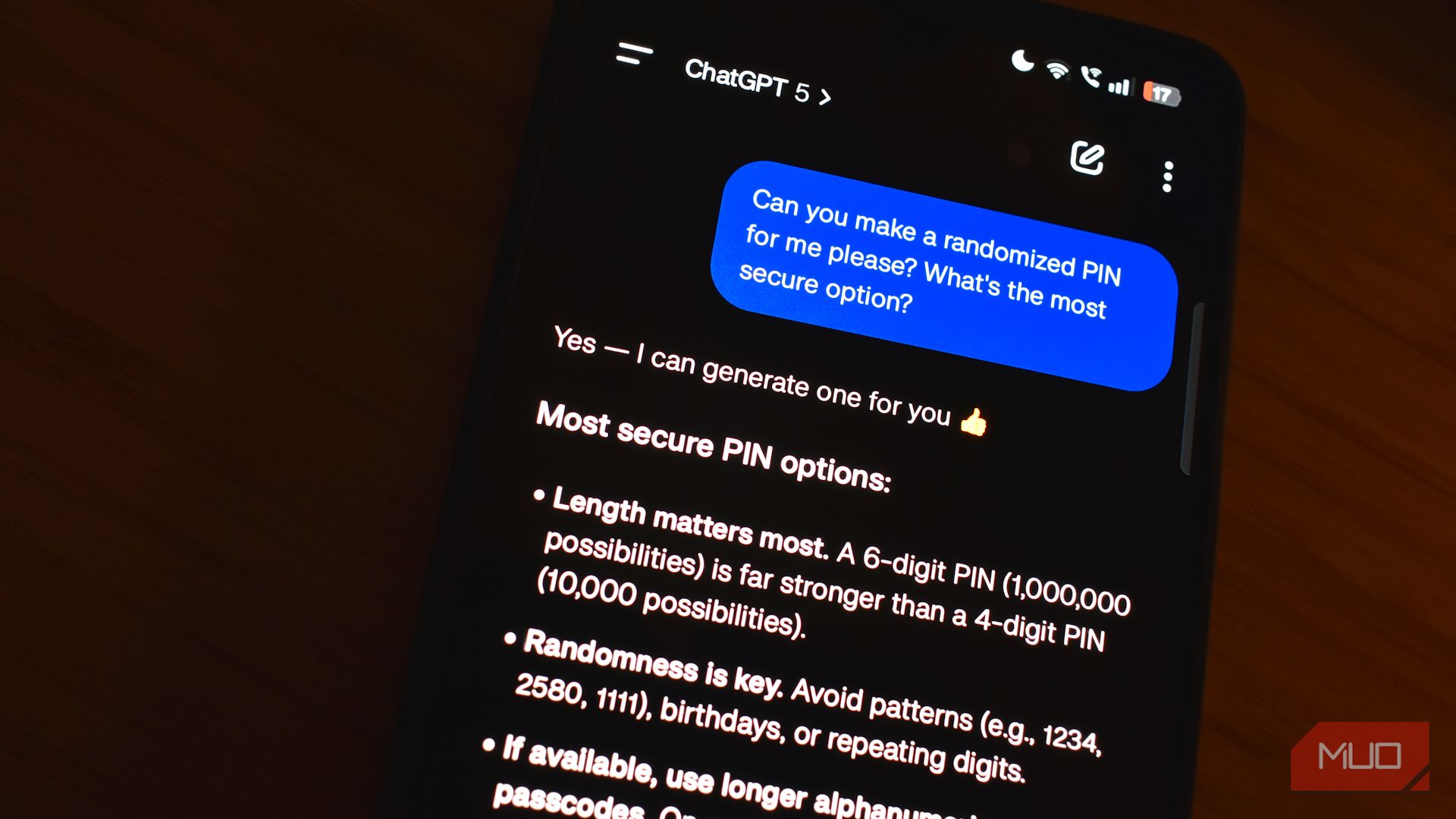

- The article recounts a user’s experience of inadvertently sharing sensitive information, including their Windows PIN, with ChatGPT through its Memory feature.

- The user had switched to ChatGPT as their default voice assistant and casually asked the AI to save their PIN during a technical troubleshooting session.

- On reviewing ChatGPT’s Memory logs, the user found it had stored personal details beyond the PIN, such as habits and preferences, which raised privacy concerns.

- The Windows PIN provides access far beyond just unlocking a device; it can enable access to saved browser passwords, integrated password managers, two-factor authentication (2FA) systems like Microsoft Authenticator, and other critical applications tied to Windows Hello integration.

- The user emphasizes how AI assistants can unintentionally compile sensitive data over time through casual interactions.

Indian Opinion Analysis

The incident highlights a critical concern: as India relies more on AI-powered assistants, the resulting privacy risks call for regulatory scrutiny and public awareness campaigns on safe practices for using these tools. As India continues its digital transformation, cybersecurity frameworks must evolve to address the vulnerabilities created when users inadvertently share data with software like ChatGPT or similar platforms. Users should be encouraged to evaluate the permissions they grant AI tools and to stay aware of what information they share, intentionally or not, in everyday interactions.

This case also underscores the need for better education about devices that rely on centralized authentication systems such as Windows Hello. Many Indian users rely on these technologies for work-from-home setups or online banking, so without proper safeguards the consequences could extend beyond individual users to national cybersecurity.

Read more: Original Article

Quick Summary

- The article discusses the author’s accidental disclosure of their Windows PIN to an AI system and the resulting concern that, because the systems tied to that PIN are interconnected, the leak could lead to account takeovers.

- Immediate actions taken included changing the PIN, moving critical passwords to a standalone password manager, and using hardware security keys.

- The author audited ChatGPT’s Memory settings to erase stored sensitive information and now uses Temporary Chat (“incognito mode”) for technical discussions.

- Temporary Chat keeps conversations out of Memory and out of AI training, giving users a more private option.

- According to the article, AI memory features are useful but need the same careful management as any other system that stores personal data.

Image 1: Auditing ChatGPT’s Memory settings.

Image 2: Using Temporary Chat on ChatGPT.

Indian Opinion Analysis

While this incident reflects a personal vulnerability arising from a casual interaction with an AI system, it has broader implications for India as AI adoption grows across sectors. As India advances its digital transformation, bringing tools like generative AI into offices and public services, robust cybersecurity measures become essential.

This scenario shows how even seemingly harmless disclosures can cascade into larger problems when proper precautions are not followed. Institutions should prioritize digital literacy campaigns for individuals and businesses alike, and ensure there are clear mechanisms for auditing sensitive data stored by such platforms.

For India’s growing tech sector and its data privacy policymaking (e.g., the Digital Personal Data Protection Act, 2023), this case points to the need for protocols governing how users interact with intelligent systems that retain memory. Balancing the benefits these platforms offer, such as personalized experiences, against the risk of inadvertent exposure will likely shape the debate over building India’s digital ecosystem responsibly.